Artificial intelligence, or A.I., has been a staple of science fiction for about as long as science fiction has been around. In the real world, though, and especially in the consumer market, artificial intelligence isn't all that prevalent. At the end of March, word surfaced that Apple's purchase of Siri the previous year would have a major impact on the next version of iOS, iOS 5. With the purchase of Siri, and with Apple's implementation of the acquired technology, artificial intelligence may very soon become a household name.

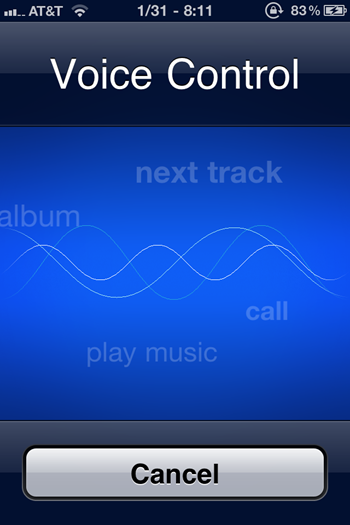

Let's run a quick refresher course. Right now, voice commands in iOS are pretty simple. You can play a song or place a call with just your voice, which is pretty neat in and of itself. But voice dialing isn't a new feature by any means, and while launching a song by asking your device to do so is pretty cool, it's probably not a widely used feature. Before Apple bought the company, Siri built a virtual personal assistant app for the iPhone, and its artificial intelligence and assistance technology could go a long way in iOS, if implemented correctly.

But that raises the obvious question: if people aren't using the voice commands iOS already offers, will simply adding more make people jump on the technology? After all, using a voice command to play a song or call someone isn't hidden anywhere in iOS, and if people aren't using it, it's because they don't want to. So giving them the ability to, for example, launch their favorite application by voice doesn't make the technology new; it just adds one more thing they can launch.

So what's going to make the difference? How can Apple integrate Siri's technology into iOS 5 so thoroughly that people who get their hands on the platform for the first time start talking about artificial intelligence and how great it is? And if Apple pulls this off as well as we all assume/hope/dream it can, will the next iPhone be more of a personal assistant device than just a smartphone?

And how high does Apple have to aim here? There's no denying that Google's voice implementation in Android is better. Its integration with the software is top-notch, and the fact that speech-to-text is built in right out of the box (depending on your version of Android, of course) is another plus. Does Apple just need to beat Android? Or does Apple need to keep aiming for the clouds, in the hope that by the time the next version of Android hits devices, Apple's voice features aren't already outdated?

If artificial intelligence, as implemented in iOS, becomes an integral feature of our smartphones and mobile devices in the future, what else would you like to see? How can Apple push voice integration in iOS and use these new features to get you interested in the platform? Let me know what you think in the comments.