Following announcements at Google I/O earlier this month, new Google Lens features are beginning to roll out to users this week.

The new Google Lens filters for Dining and Translate are arriving on both Android and iOS. Android users will need ARCore to access them, and they can be found in the Google Photos and Google Assistant apps as well as in many first-party camera apps. On iOS, you'll find these features in the Google Photos app and the Google app.

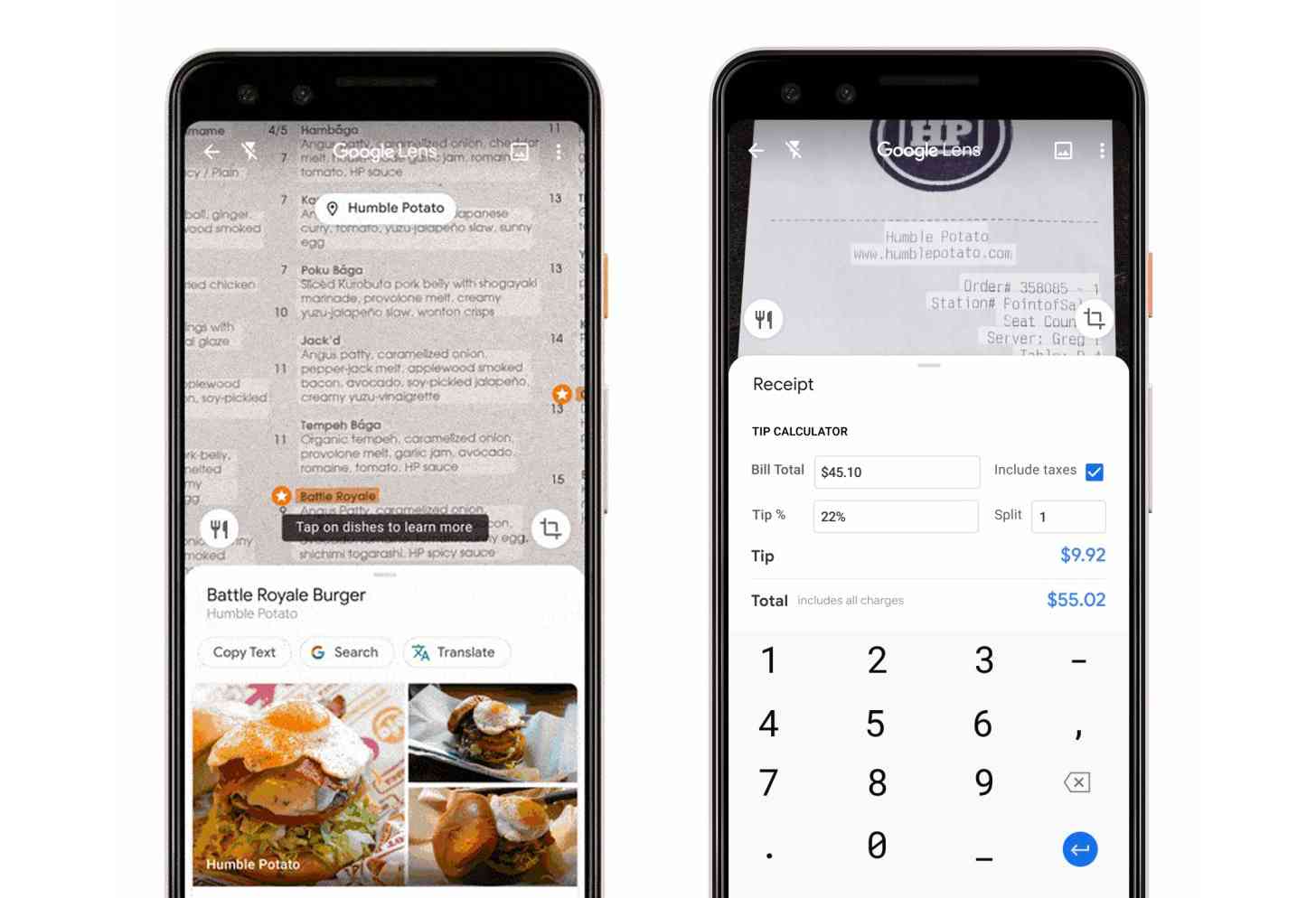

The Dining filter lets you use Lens to highlight which dishes on a physical menu are popular. You can tap on a dish in Lens to see what it looks like and what people think of it, through photos and videos from Google Maps. Pointing your camera at the receipt will also help you calculate the tip and split the bill.

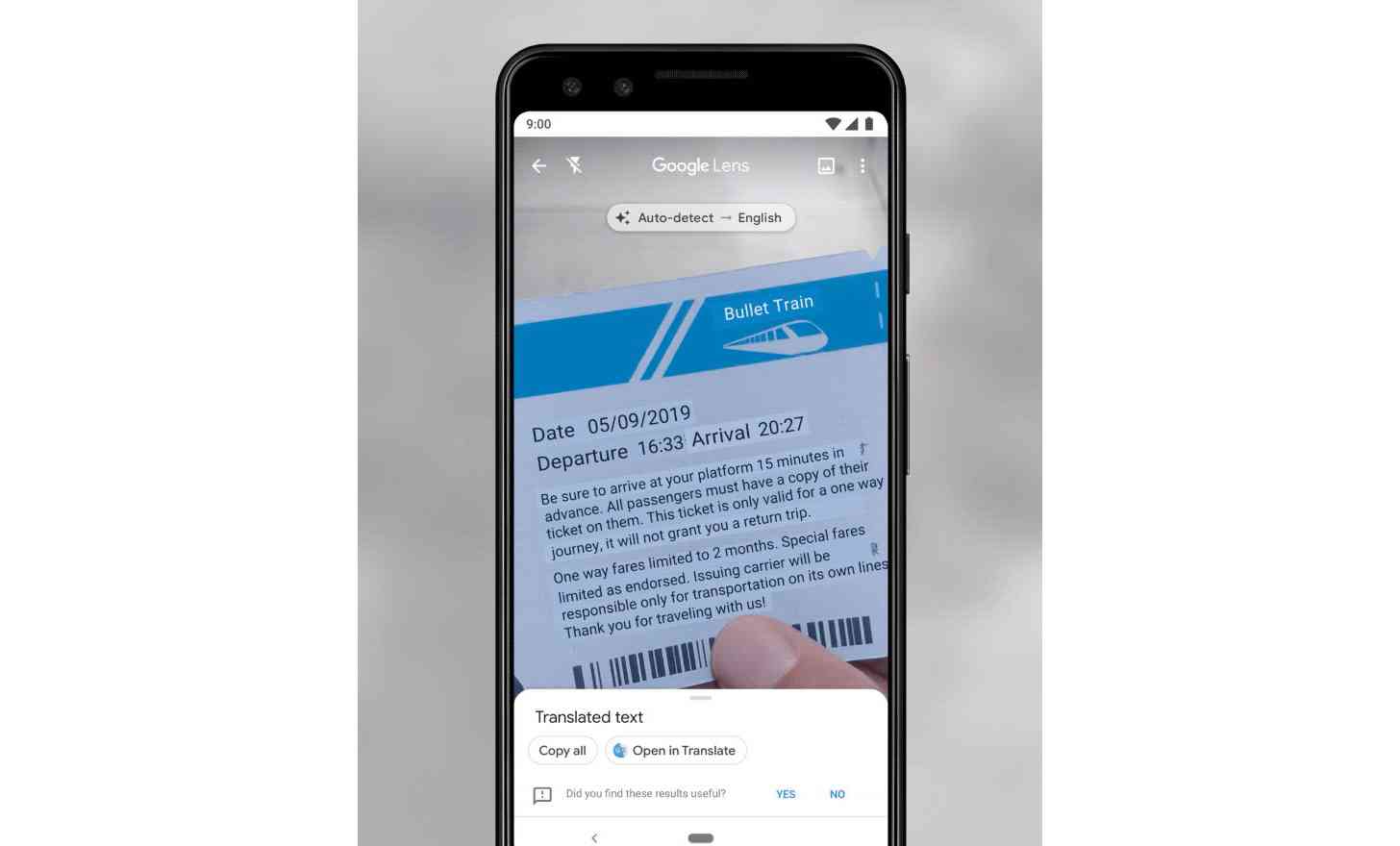

The Translate filter works much as you'd expect: you point your camera at text, and Lens detects the language and translates it. The translation appears directly on top of the original words. This feature supports more than 100 languages.

These features have fairly specific use cases, so you may not use them every day, but they could come in handy when you do need them. For example, the Translate filter could be useful for quickly translating signs when you're traveling in a foreign country.